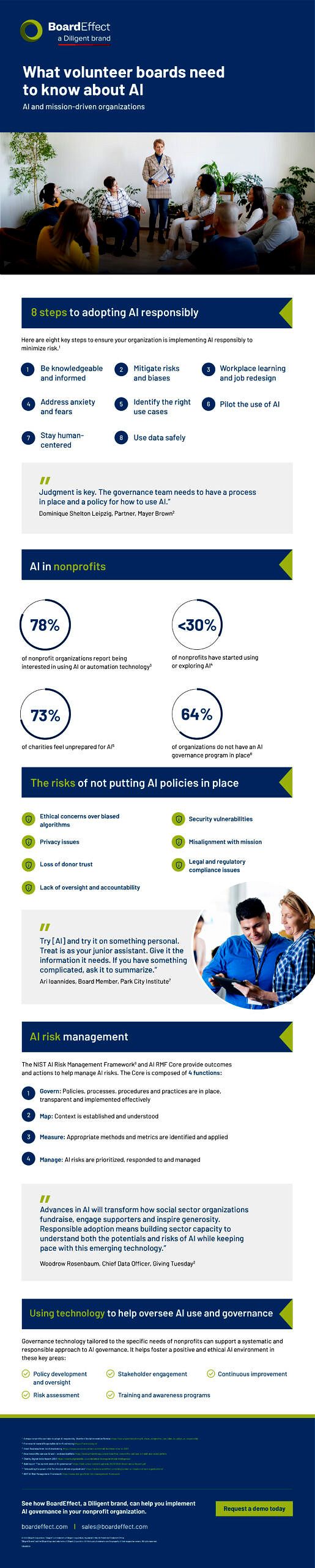

What volunteer boards need to know about AI

Nonprofit leaders and boards recognize that AI will expand into even more uses and that new AI applications beyond ChatGPT will keep emerging at a rapid pace. To ensure AI is used responsibly within their organizations, these leaders must take steps to understand AI and learn how to institute governance policies that minimize its risks.

AI, once thought to be a trendy technology — perhaps even short-lived — is clearly here to stay. Not a day goes by without a news story or article about machine learning’s impact on people, companies, governments and mission-driven organizations.

While the advantages of machine learning and deep learning are numerous and well-documented, they have not escaped skepticism. Is ChatGPT biased? Do digital assistants retain access to personal information? Do chatbots improve the customer service process? Will AI really help with an organization’s fundraising campaign?

What you’ll learn

- How to adopt AI responsibly

- Latest stats on AI and nonprofits

- The risks of not putting AI governance in place

Our latest infographic looks at the role of AI in nonprofits, how it can be adopted responsibly, and the risks of not creating policies to oversee and govern its use.

Download our infographic in shareable formats: PDF, JPEG and PPT.

“Advances in AI will transform how social sector organizations fundraise, engage supporters, and inspire generosity. Responsible adoption means building sector capacity to understand both the potentials and risks of AI while keeping pace with this emerging technology.” – Woodrow Rosenbaum, Chief Data Officer, Giving Tuesday

8 steps to responsible AI adoption

The Stanford Center on Philanthropy and Civil Society explores eight key steps to adopting AI skillfully and with care. These steps provide a useful framework for nonprofit leaders and board members who see the potential value of AI but are hesitant to give it the full go-ahead.

- Be knowledgeable and informed. Take time to understand what AI can and can’t do. Learn how your staff plans to use tools like ChatGPT or chatbots. Anticipate outcomes and risks before a full-scale launch.

- Address anxieties and fears. A common fear is that AI will eliminate jobs. If ChatGPT can write a 600-word blog post, why retain a copywriter? Leaders can calm employee anxieties with straightforward and honest discussions. Jobs may change in the future, but according to the World Economic Forum, AI is not likely to eliminate them.

- Stay human-centered. Assure your team members that humans will always oversee technology and make the final call on critical decisions.

- Use data safely. This may seem obvious, but privacy and permission are especially important in nonprofits. For example, does your organization have permission to use written materials by authors whose work surfaces in AI searches?

- Mitigate risks and biases. Design and implement a methodology to ensure that AI tools are safe and unbiased. Anticipate worst-case scenarios before they occur.

- Identify the right use cases. Nonprofit leaders know how time-consuming certain tasks can be, particularly during fundraising seasons. Think about the initiatives that are not getting done because of necessary (but repetitive) jobs. AI automation can help by freeing up time for more essential activities.

- Pilot the use of AI. Test and retest. Before deploying a full-blown AI application, run a small, short-term test and let your team members evaluate the results. Were the results accurate? What impact did the AI application have on their jobs and time?

- Redesign jobs and support workplace learning. Be prepared to refresh job descriptions and provide skills training as AI becomes the norm. Produce and circulate an AI handbook with best practices and guidelines unique to your nonprofit.

“Try [AI] and try it on something personal. Treat it as your junior assistant. Give it the information it needs. If you have something complicated, ask it to summarize.” – Ari Ioannides, Board Member, Park City Institute

AI in nonprofits

Nonprofits are seeing the upsides of using AI tools in their organizations. Yet various reports and surveys suggest that while interest is growing rapidly, the knowledge gap remains wide. Constant Contact’s Small Business Report finds that 78% of nonprofit organizations are interested in using AI or automation technology.

However, Nathan Chappell of Donor Search states that fewer than 30% of nonprofits have started using or exploring AI. The Charity Digital Skills Report 2023 found that, of the 100 respondents to a flash poll about AI, a majority (78%) think AI is relevant to their charity, but 73% feel unprepared to respond to the opportunities and challenges AI brings.

Taking a broader look at AI governance policies across industries and in both the public and private sectors, Babl AI Inc. used a three-pronged methodology (literature review, surveys and interviews) and found that 64% of organizations do not have an AI governance program in place.

“Judgment is key. The governance team needs to have a process in place and a policy for how to use AI.” – Dominique Shelton Leipzig, Partner, Mayer

The risks of not putting AI policies in place

It is easy to be enticed by the advantages of AI. Who wouldn’t want to use a lightning-fast application to research giving trends among major donors? Or launch a fundraising newsletter in hours rather than days? The benefits are many, but so are the risks when no organizational policies and procedures govern AI. Here are some of the key risks of not putting governance in place:

- Ethical concerns over biased algorithms. AI algorithms may be biased, and when they are, they can reinforce prejudice and inequality.

- Privacy issues. Protecting personal and institutional data is fundamental, especially as deepfakes, disinformation and hate speech proliferate.

- Loss of donor trust. When donors lose trust in nonprofit organizations for any reason, including the misuse of AI, regaining that trust and financial support can be difficult or even impossible.

- Security vulnerabilities. Cybersecurity incidents can derail nonprofits, wreaking havoc on operations, call centers and data management.

- Misalignment with mission. Mission-driven organizations must do everything possible to safeguard their reason for being. Unchecked AI that produces viewpoints or goals at odds with the mission can undermine it.

- Legal and regulatory compliance issues. Nonprofits are bound by legal and regulatory compliance requirements. As AI continues to permeate every aspect of society, more AI-specific regulations are likely to emerge, and organizations will need to comply with them.

- Lack of oversight and accountability. Without accountability and oversight, problems ranging from data security breaches to flawed decisions based on unverified AI information can ruin a nonprofit.

AI risk management

The demand for AI oversight and risk management has been heard globally across sectors. To address this vital need, the U.S. Department of Commerce’s National Institute of Standards and Technology (NIST) has released the Artificial Intelligence Risk Management Framework (AI RMF). The framework, developed in collaboration with both the public and private sectors, is a roadmap for the safe adoption of AI technologies. It gives organizational leaders and board members the tools to better understand and manage the risks of AI technologies.

Here’s a look at the four core functions in the AI RMF.

- Govern: Organizations need AI policies, processes, procedures and practices that are not only in place but are transparent and implemented.

- Map: Context is established and understood. How AI will be deployed, the potential benefits and risks, and organizational expectations are addressed in this function.

- Measure: Appropriate methods and metrics are identified and applied. These tools are intended to assess and document AI risks and evaluate the controls designed to mitigate risks.

- Manage: AI risks are prioritized, responded to and managed. Once risks are identified in the other core functions, organizations prioritize them and determine how best to respond. Can they adequately address the risks and continue? Or should the AI application be suspended?

Using technology to help oversee AI

Governance technology tailored to the distinct needs of mission-driven organizations, including nonprofits and those focused on social and environmental causes, can support a systematic and responsible approach to AI governance. As noted above, surveys suggest that while 78% of nonprofit organizations are interested in using AI, fewer than 30% have started to explore or use it.

BoardEffect, a Diligent brand, supports mission-driven organizations. Our board management software streamlines board processes, enhances communication and promotes accountability. This helps volunteer boards support their organization’s leaders as they develop points of view about AI and governance. BoardEffect provides a foundation for leaders and boards to promote a positive and ethical AI environment in key areas:

- Policy development and oversight

- Risk assessment

- Stakeholder engagement

- Training and awareness programs

- Continuous improvement

Whether it’s implementing, reviewing or updating AI policies or training board members on the value and risks of AI use, BoardEffect allows nonprofits to do so on a secure platform with optimal control.

See how BoardEffect, a Diligent brand, can help you implement AI governance in your nonprofit organization.

Take advantage of intelligent AI tools built specifically into BoardEffect to support the work of governing boards, including features such as:

- AI Board Book Summarization, which generates a detailed summary of your board books with a single click, providing refined, actionable insights from your meeting materials.

- AI Meeting Minutes and Actions, which generates relevant, high-quality meeting minutes with actions built from board materials, typed notes and transcripts, helping you articulate key takeaways and track decisions and actions.

Request a demo today.

Ed is a seasoned professional with over 12 years of experience in the governance space, where he has collaborated with a diverse range of organizations. His passion lies in empowering these organizations to optimize their operations through the strategic integration of technology, particularly in Governance, Risk, and Compliance (GRC).
